MOE & MOA for Large Language Models

Seeking advice from a panel of experts

Large Language Models (LLMs) have undoubtedly taken the tech industry by storm. Their meteoric rise has been fueled by vast amounts of data from Wikipedia, web pages, books, troves of research papers, and user content from our beloved social media platforms. These data- and compute-hungry models have eagerly absorbed multimodal data from audio and video libraries, and state-of-the-art (SOTA) models are trained for months on tens of thousands of NVIDIA GPUs. All of this makes us wonder whether this exponential growth can continue.

There are quite a few challenges facing these LLMs; let's investigate a few here.

- Cost and Scalability: Larger models can cost tens of millions of dollars to train and serve, which becomes a barrier to widespread adoption in day-to-day applications. (See the cost of training GPT-4.)

- Training Data Saturation: Publicly available datasets will soon be exhausted, and models may have to rely on slowly generated user content. Only companies and organizations with a steady source of new content will be able to keep producing improvements.

- Hallucinations: Models that generate false and unsubstantiated information will be a deterrent; users will want validation from authoritative sources before relying on them in sensitive applications.

- Exploring the Unknown: LLMs are now being used for applications beyond their original intent. For example, they have shown great ability in game play, scientific discovery, and climate modeling. We will need new approaches to tackle these complex situations.

Certainly! Here's the translated text in simplified Chinese, keeping the HTML structure: ```html

在我们开始过多担忧未来之前,让我们来看看人工智能研究人员如何不知疲倦地努力工作,以确保持续进步。混合专家(MoE)和混合代理(MoA)的创新显示出希望正在逐渐显现。

``` This HTML snippet contains the translated text while maintaining the basic structure for web content.Sure, here's the translated text in simplified Chinese, keeping the HTML structure intact: ```html

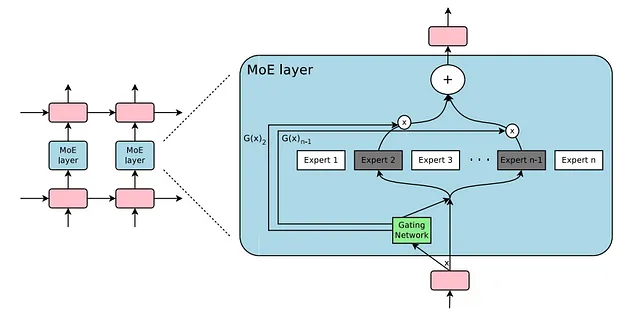

首次引入于2017年,《专家混合技术》展示了多个专家和一个能够选择稀疏专家集的门控网络,可以在较低的计算成本下产生显著改进的结果。门控决策可以关闭网络的大部分部分,实现条件计算,专业化提升了语言建模和机器翻译任务的性能。

``` This HTML snippet contains the translated text in simplified Chinese within a `` (paragraph) tag, suitable for embedding in a webpage while preserving the structure.
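To make the gating idea concrete, here is a minimal Python sketch of a sparsely gated MoE layer, written for illustration only. It assumes toy experts that map a vector to a vector of the same size and a purely linear gate; the 2017 layer also adds tunable noise to the gate scores and uses auxiliary load-balancing losses, both omitted here. The names `top_k_gate`, `moe_layer`, and `make_scaler` are hypothetical. The point to notice is that only the `k` selected experts are ever evaluated, which is what conditional computation means in practice.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def top_k_gate(x: Vector, gate_weights: List[Vector], k: int) -> List[Tuple[int, float]]:
    """Score every expert, keep the k best, and softmax over just those k."""
    logits = [dot(w, x) for w in gate_weights]
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in top]  # unselected experts get weight 0


def moe_layer(x: Vector, experts: List[Expert], gate_weights: List[Vector],
              k: int = 2) -> Tuple[Vector, List[int]]:
    """Weighted sum of the selected experts' outputs; the rest are never called."""
    gates = top_k_gate(x, gate_weights, k)
    out = [0.0] * len(x)
    for i, g in gates:
        y = experts[i](x)  # conditional computation: only k experts run
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, [i for i, _ in gates]


def make_scaler(c: float) -> Expert:
    """Toy expert: multiplies its input by a constant."""
    return lambda v: [c * vi for vi in v]


experts = [make_scaler(c) for c in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
y, chosen = moe_layer([2.0, 1.0], experts, gate_weights, k=2)
print(chosen)  # [0, 1]
```

With these hand-picked gate weights, the input `[2.0, 1.0]` is routed to experts 0 and 1 only; experts 2 and 3 are never invoked, so their cost is skipped entirely.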

Sure! Here’s the translated text while keeping the HTML structure: ```html

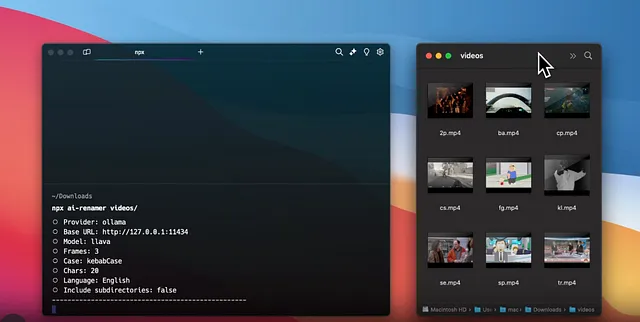

上面的图显示了在递归神经网络中集成了一个专家混合层。门控层仅激活两个专家来执行任务,然后将它们的输出组合在一起。

```Certainly! Here's the text translated into simplified Chinese while keeping the HTML structure: ```html

尽管这仅在某些基准测试中得到展示,条件计算已经打开了一条道路,使得持续改进成为可能,而无需依赖不断增长的模型尺寸。

``` This HTML snippet contains the translated text: 尽管这仅在某些基准测试中得到展示,条件计算已经打开了一条道路,使得持续改进成为可能,而无需依赖不断增长的模型尺寸。Certainly! Here's the HTML structure with the translated text in simplified Chinese: ```html

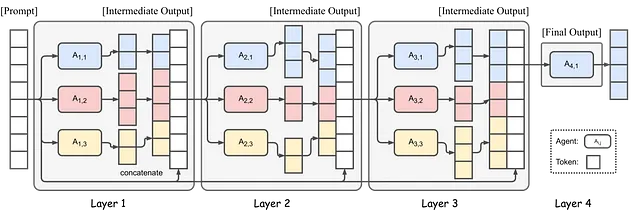

灵感来自于MOE,混合代理技术利用多个LLM来改善结果。问题通过多个LLM(也称为代理)路由,每个阶段增强结果。作者已经证明,与更大的SOTA模型相比,它利用较小的模型产生更好的结果。

``` Translated text: 灵感来自于MOE,混合代理技术利用多个LLM来改善结果。问题通过多个LLM(也称为代理)路由,每个阶段增强结果。作者已经证明,与更大的SOTA模型相比,它利用较小的模型产生更好的结果。
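A rough Python sketch of the layered MoA flow, purely illustrative: an "agent" here is any hypothetical function from a prompt string to a response string (in a real system, a wrapper around a call to some LLM), and `build_prompt` uses an invented format for passing earlier responses along. The prompts used by the MoA authors are not reproduced here. Each agent in a layer sees the question plus every response from the previous layer, and a final aggregator condenses the last layer's responses into one answer.

```python
from typing import Callable, List

# Hypothetical agent interface: prompt in, response out.
# In a real system each agent would wrap a call to a different LLM.
Agent = Callable[[str], str]


def build_prompt(question: str, previous: List[str]) -> str:
    """Placeholder format: the question followed by numbered earlier responses."""
    if not previous:
        return question
    refs = "\n".join(f"[{i + 1}] {r}" for i, r in enumerate(previous))
    return f"{question}\n\nResponses from other models:\n{refs}"


def mixture_of_agents(question: str, layers: List[List[Agent]], aggregator: Agent) -> str:
    """Route the question through successive layers of agents.

    Every agent in a layer receives the outputs of all agents in the
    previous layer, so each stage can refine the last one. A final
    aggregator turns the last layer's outputs into a single answer.
    """
    previous: List[str] = []
    for layer in layers:
        prompt = build_prompt(question, previous)
        previous = [agent(prompt) for agent in layer]
    return aggregator(build_prompt(question, previous))


def make_toy_agent(name: str) -> Agent:
    """Toy stand-in for an LLM: reports how many earlier responses it was shown."""
    return lambda prompt: f"{name} saw {prompt.count('[')} responses"


layers = [[make_toy_agent(f"L{layer_no}A{agent_no}") for agent_no in range(1, 4)]
          for layer_no in range(1, 3)]
answer = mixture_of_agents("What is MoA?", layers, make_toy_agent("Aggregator"))
print(answer)  # Aggregator saw 3 responses
```

Agents within a layer are independent of each other, so they could run in parallel; the layers themselves must run in sequence, because each one waits for the previous layer's complete responses.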

Certainly! Here's the translated text in simplified Chinese while keeping the HTML structure intact: ```html

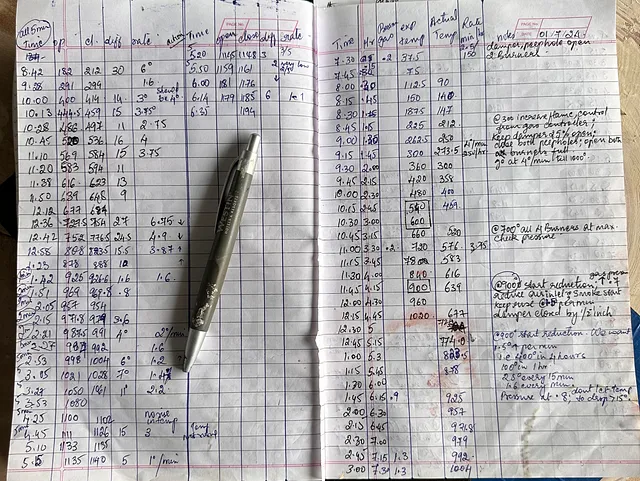

该图显示了4个混合代理层,每层有3个代理。为每一层选择合适的LLM非常重要,以确保良好的协作和生成高质量的响应。(来源)

``` In Chinese: ```html该图显示了4个混合代理层,每层有3个代理。为每一层选择合适的LLM非常重要,以确保良好的协作和生成高质量的响应。(来源)

```Sure, here's the HTML structure with the translated text in simplified Chinese: ```html

MOA依赖于一个事实,即多语言模型联合工作可以产生更好的输出,因为它们可以结合其他模型的响应。LLM的角色分为生成多样化输出的提议者和能够将它们组合成高质量响应的聚合器。多阶段方法可能会增加首令牌时间(TTFT),因此需要开发缓解方法,使其适用于广泛的应用场景。

``` In the above HTML snippet: - `` tags are used to denote a paragraph for the translated text. - The text has been translated into simplified Chinese and inserted between the `

` opening and closing tags.
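Why does the multi-stage design hurt TTFT? A back-of-the-envelope model helps. The sketch below assumes that agents within a layer run in parallel, that each proposer layer must finish generating complete responses before the next layer can start, and that the user sees the first token only when the final aggregator emits it. The function name `moa_ttft` and all latency numbers are hypothetical, chosen for illustration rather than measured.

```python
from typing import List


def moa_ttft(proposer_latencies: List[List[float]], aggregator_ttft: float) -> float:
    """Estimate time to first token (seconds) for a layered MoA pipeline.

    proposer_latencies[i][j] is the time agent j in proposer layer i needs to
    produce its *complete* response. Agents in a layer run in parallel, so a
    layer is done when its slowest agent is done; layers run one after another.
    """
    waiting = sum(max(layer) for layer in proposer_latencies)
    return waiting + aggregator_ttft


# Hypothetical full-response latencies (seconds): three proposer layers, three agents each.
layers = [[4.0, 5.5, 3.2], [4.1, 6.0, 3.9], [5.0, 4.4, 4.8]]
single_model_ttft = 0.5  # hypothetical TTFT of one LLM answering directly

print(moa_ttft(layers, aggregator_ttft=single_model_ttft))  # 17.0
print(single_model_ttft)                                     # 0.5
```

Under these assumptions the wait grows with every added layer and is set by the slowest agent in each one, while a single model answering directly would start streaming after its own TTFT.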

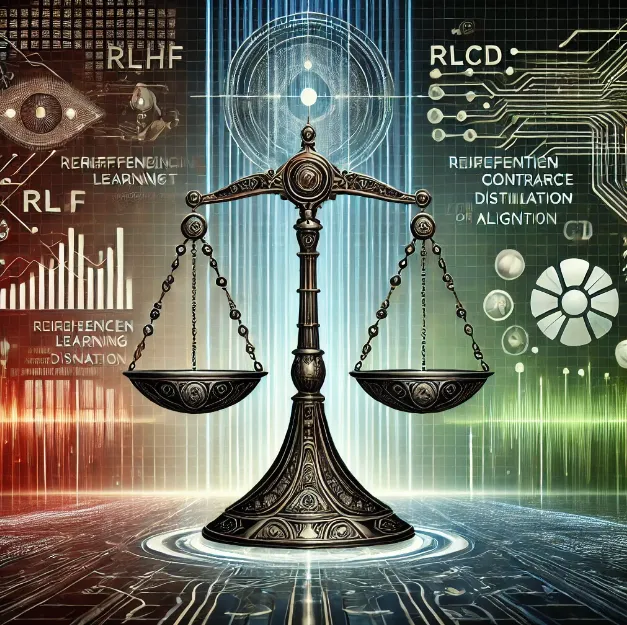

MoE and MoA share similar building blocks but behave differently. MoE is built on the idea of picking a set of experts to complete a task, with a gating network responsible for selecting the right experts. In MoA, each team of agents builds on the work of the previous team, improving the result at every stage.

Innovations in MoE and MoA have opened a path in which specialized components or models that combine, collaborate, and exchange information can keep delivering better results, even when scaling model parameters and training datasets linearly is no longer straightforward.

While only hindsight will tell us whether LLM innovations can last, I have been following research in the field for insights. Seeing what universities and research institutions are achieving, I am very optimistic about what lies ahead. It feels like we are only warming up for a wave of new capabilities and applications. We don't know what they will be, but we can be fairly certain that the days ahead will surprise us.

"We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run." - Amara's Law

References

[1] Wang, J., Wang, J., Athiwaratkun, B., Zhang, C., & Zou, J. (2024). Mixture-of-Agents Enhances Large Language Model Capabilities. arXiv preprint arXiv:2406.04692. https://arxiv.org/abs/2406.04692

[2] Shazeer, N., Mirhoseini, A., Maziarz, K., Davis, A., Le, Q., Hinton, G., & Dean, J. (2017). Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer. arXiv preprint arXiv:1701.06538. https://arxiv.org/abs/1701.06538