Sure, here's how you can write "Evaluating RAG capabilities of Small Language Models" in simplified Chinese while keeping the HTML structure: ```html 评估小型语言模型的RAG能力 ``` This HTML snippet maintains the structure while providing the translated text in simplified Chinese.

Sure, here is the text translated into simplified Chinese while keeping the HTML structure: ```html 由Lazaro Hurtado和吴雪 ``` This HTML code maintains the structure and specifies that the text inside the `` tag is in simplified Chinese (`zh-CN`).

```html

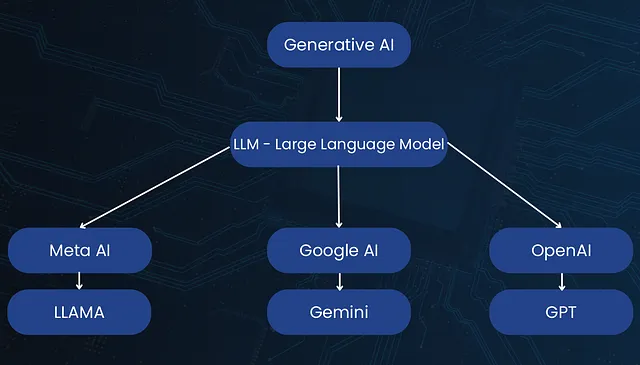

大型语言模型(LLMs)是强大的深度学习模型,能够为诸如问答、总结或情感分析等任务生成高质量的响应[1]。因此,LLMs是GitHub Copilot、Microsoft Copilot和ChatGPT等合作者和聊天机器人应用的支柱。然而,需要注意的是,这些应用及其他类似应用不仅依赖于模型本身的内在能力来提供卓越的用户体验;相反,它们通过将相关信息注入上下文窗口来增强驱动基于证据的结果。这就是在创建这些类型的应用时使用检索增强生成(RAG)系统的主要思想。

``` This HTML snippet contains the translated text in simplified Chinese, preserving the structure as requested.在本文中,我们专注于小型语言模型(SLMs),这些模型基于与LLMs相同的架构,但参数数量较少。我们的主要目标是评估SLMs在回答用户查询时的表现,当给定一个增强的提示时,类似于在RAG系统中观察到的情况。我们实验的目的是衡量在RAG或聊天机器人应用中使用SLM的可行性,这些模型在计算效率和环境友好性方面更具优势,而不是LLM。

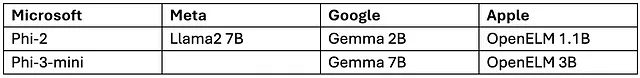

To translate the English text "Throughout this article we refer to SLMs as the family of models that are near the 7-billion parameter count. We therefore consider the following models, as well as their fine-tuned variants, when running our experiment:" into simplified Chinese while keeping the HTML structure, you can use the following translation: ```html 在本文中,我们将SLM(大型语言模型)称为参数接近70亿的模型家族。因此,在进行实验时,我们考虑以下模型及其精调变体: ``` This translation maintains the original meaning and structure of the English sentence while being appropriate for a technical or academic context, as typically found in articles or research papers.

Sure, here is the translated text in simplified Chinese, while maintaining the HTML structure: ```html 我们还定义RAG为模型输入的参数化,其中包括用户的查询和一些检索到的媒体,而在我们的情况下,这些媒体是文档。基于RAG的应用还采用了几种技术,如分块、向量嵌入相似性搜索和重新排序,以获得更好的结果。在我们的实验中,我们假设所有这些都已经事先完成,并且我们正在使用之前参数化过的提示进行工作。 ``` This translation keeps the original structure and meaning while rendering the text in simplified Chinese.

Sure, here's how you can represent "Needle-In-A-Haystack" in simplified Chinese within an HTML structure: ```html 大海捞针 ``` In this HTML snippet: - `` indicates that the content inside is in Chinese. - "大海捞针" (Dàhǎi lāo zhēn) is the simplified Chinese translation of "Needle-In-A-Haystack".

Sure, here's the translation: 描述

```html

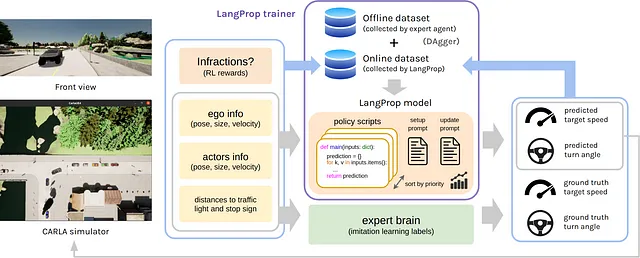

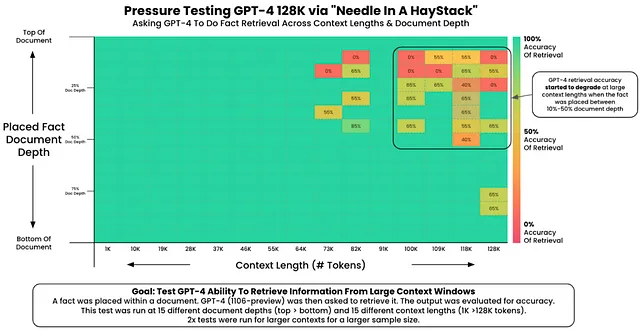

Needle-In-A-Haystack(NIAH)[2] 评估测试包括将一段信息(针)插入到文档(干草垛)中,并通过询问与之相关的问题来评估模型召回插入信息的能力。这使得它成为评估模型在RAG或内存系统中性能的良好测试平台。

```Sure, here's how you can write "How NIAH works" in simplified Chinese, while keeping the HTML structure intact: ```html 如何使用NIAH ``` This translates directly to "How to use NIAH" in English.

To translate the given text into simplified Chinese while keeping the HTML structure, you would use the following: ```html

NIAH 的工作原理是基于 (context_length, document_depth) 对创建提示。其中,文档深度可以在 0 到 100% 范围内变化,而上下文长度可以高达模型的最大上下文长度。提示是通过将一个句子(针)嵌入到某个文档(草堆)中,使得总的标记数不超过 context_length 而创建的。一个检索问题,涉及到嵌入的句子,是最终提示的一部分。语言模型的目标是在文档中找到嵌入的句子以成功地回答这个问题。为了评估模型的响应,我们使用 GPT-4 1106-preview [3] 并要求它根据以下标准,将响应与嵌入的句子(地面真相)在 1 到 10 的尺度上进行评分:

``` This HTML snippet contains the translated text in simplified Chinese, wrapped in a `` (paragraph) tag to maintain structure.

Here is the translated text in simplified Chinese while keeping the HTML structure: ```html

例如,假设我们的“针”和“干草堆”如下所示:

``` This HTML snippet maintains the structure while providing the translation of "For example, suppose we have the following as our needle and haystack:"Sure, here's the translation of the text into simplified Chinese while keeping the HTML structure: ```html 针对这个问题:“在旧金山做的最好的事情就是坐在多洛雷斯公园里。” ``` This HTML snippet maintains the structure while providing the translation.

To translate "Haystack: Microsoft_quarterly_earnings.txt" to simplified Chinese while keeping the HTML structure, you can use the following: ```html Haystack: Microsoft_quarterly_earnings.txt ``` This HTML snippet ensures that the text "Haystack: Microsoft_quarterly_earnings.txt" is displayed in simplified Chinese, with the language attribute (`lang="zh-CN"`) indicating the language to the browser for proper handling of text rendering and pronunciation in speech synthesis systems.

Sure, here's the translated text in simplified Chinese while keeping the HTML structure intact: ```html

问题:“在旧金山做什么最好?”

```Sure, here is the translated text in simplified Chinese: ```html

假设我们将从上下文长度为500到1024和文档深度为0到100%的范围内进行考虑,使用线性间隔算法来分成35个区间。从这个线性间隔中,让我们考虑(700, 38)这一对。首先,我们选择干草垛的一部分,使其具有700 - 词数(needle) - 词数(question)个令牌,然后将"38%"的needle嵌入修剪后的干草垛中。最后,我们将问题附加在末尾,并使用这个最终提示来查询模型。一旦模型生成了响应,我们将使用GPT-4来评估它。

``` This HTML snippet maintains the structure while presenting the text in simplified Chinese.

Certainly! In simplified Chinese, "Setup" would be translated as "设置".

在保持HTML结构的情况下,将以下英文文本翻译为简体中文: 我们实验中所有的模型都可以通过HuggingFace免费访问,唯一的限制是评估器是GPT-4,需要使用OpenAI或Azure OpenAI API密钥。所有模型使用的生成配置如下:

To keep the HTML structure intact while translating the text to simplified Chinese, you can use the following format: ```html {"temperature": 0.7, ``` Here's the translated text in simplified Chinese: ```html {"temperature": 0.7, ``` Make sure to maintain the structure and punctuation as per your original requirement.

Sure, here is the translation of "do_sample": True, in simplified Chinese, keeping the HTML structure: ```html "do_sample": 真, ``` This preserves the structure while providing the translation.

“repetition_penalty”: 1.3,

Sure, here's the translation of "max_new_tokens": 100, into simplified Chinese while keeping the HTML structure:

```html

“max_new_tokens”: 100,

```

Translated to simplified Chinese:

```html

“max_new_tokens”: 100,

```

In HTML, text content within `` tags is typically displayed in a monospaced font, which is suitable for displaying code snippets.

Sure, here's the text translated into simplified Chinese while keeping HTML structure: ```html “use_cache”: True} ``` In simplified Chinese characters: ```html “使用缓存”: True} ``` This maintains the original HTML formatting as requested.

由于 Microsoft 的 Phi-2 模型是一个未经调整的基础模型,我们决定在我们实验进行时使用 HuggingFace 上最受欢迎的 Fine-tuned Phi-2 模型。这导致我们使用了 cognitivecomputations 的 dolphin-2_6-phi-2 [4],我们将其称为 Phi-2 经过调整的模型。我们选择了点赞数量作为决定指标,因为它更好地展示了对模型的满意程度,而不是下载次数,除非受到 cognitivecomputations 社区成员的偏见影响。(另一方面,Microsoft 的 Phi-3-mini 模型只作为经过调整的变体提供。)

Sure, here's the translation in simplified Chinese while keeping the HTML structure: ```html 对于每个模型,最小上下文长度为500个标记,最大长度设定为2048个标记。我们测试的大多数模型的完整上下文长度都大于2048个标记,但我们并不专注于对模型进行压力测试,而是仅关心模型的检索能力。因此,我们认为2048个标记是我们模型系列中足够用于测试的最小上下文长度。 ``` This HTML structure ensures the text remains formatted correctly within an HTML document while displaying the Chinese translation.

在NIAH代码库中,默认使用线性间距算法将上下文和文档长度分成35个区段。

在保持HTML结构的情况下,将以下英文文本翻译成简体中文: 所有模型都收到了相同的提示,如下所示:

Certainly! Here is the translation of the provided text into simplified Chinese while keeping the HTML structure intact: ```html "{context} 只回答以下问题,不要添加其他内容。请尽量简洁。 {retrieval_question}" ``` In this HTML snippet, the Chinese text is enclosed in quotation marks to maintain clarity and the structure requested.

在这里,context 表示已经插入针(needle)的干草堆,retrieval_question 是我们向模型询问与针相关的问题。当模型允许系统提示时,我们使用以下内容:

Sure, here is the text translated to simplified Chinese while keeping the HTML structure: ```html

你是一个乐于助人的AI机器人,为用户解答问题。请保持回答简短直接。

```Sure, the translation of "Results" to simplified Chinese while keeping HTML structure would be: ```html 结果 ``` In this HTML snippet, `` is used to wrap the translated Chinese text "结果" (pronounced "jiéguǒ"), which means "Results" in English.

To translate "Our results are divided between base models and fine-tuned models." into simplified Chinese while keeping HTML structure, you can use the following: ```html 我们的结果分为基础模型和微调模型。 ``` This HTML snippet ensures the translation is presented in a format suitable for web content.

基本模型

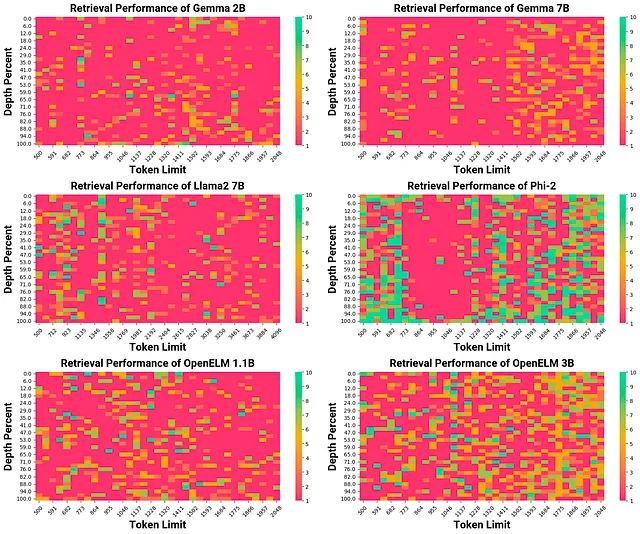

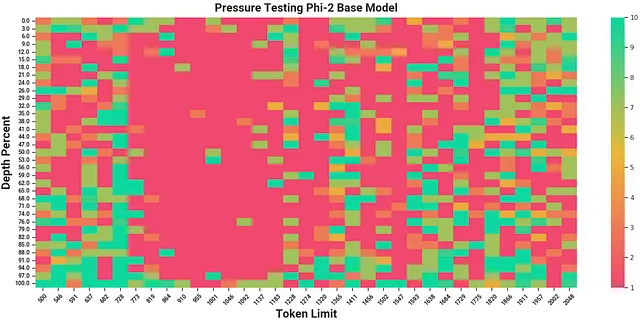

我们从在每个模型的基本变体上运行实验开始。基本变体,也称为基础模型或基础模型,是指经过训练但尚未进行任何微调或人工调整的模型。换句话说,基本变体已经学会了如何对其训练的语言建模,但在遵循指令或回答问题方面较弱。因此,我们预计在所有基本变体上都会表现不佳,事实证明除了 Phi-2 之外,情况确实如此。(我们还注意到 OpenELM 3B 表现出色,但我们在此不深入讨论。)

```html

Phi-2 是一个 27 亿参数的模型 [5],它吸取了 LIMA 的经验教训 [6],后者强调高质量和多样化数据的重要性,并且训练了 1.4 万亿个标记。考虑到 Phi-2 的表现比较大的模型更好,比如 Llama2 7B,以及训练标记更多的模型,比如 Gemma 7B,后者训练了 3 万亿个标记 [7],Phi-2 的性能令人印象深刻。

```

To keep the HTML structure, you can wrap the English text with a `

Here's the translated text in simplified Chinese while keeping the HTML structure: ```html

总体而言,显然这些基础变体模型都不适合用于RAG或聊天机器人应用。即使Phi-2的性能表现仍不足以产生准确的结果并提供良好的用户体验。此外,Phi-2显示了“中途丢失”的效果[9],如在773到1365标记之间最明显的红色区域,以及在1456到1866标记之间稍微更稀疏的情况。

``` This HTML snippet maintains the structure of the original text while providing the simplified Chinese translation.在保持HTML结构的前提下,将“Fine-tuned models”翻译为简体中文可以是:“微调模型”。

Sure, here is the translated text in simplified Chinese while keeping the HTML structure intact: ```html

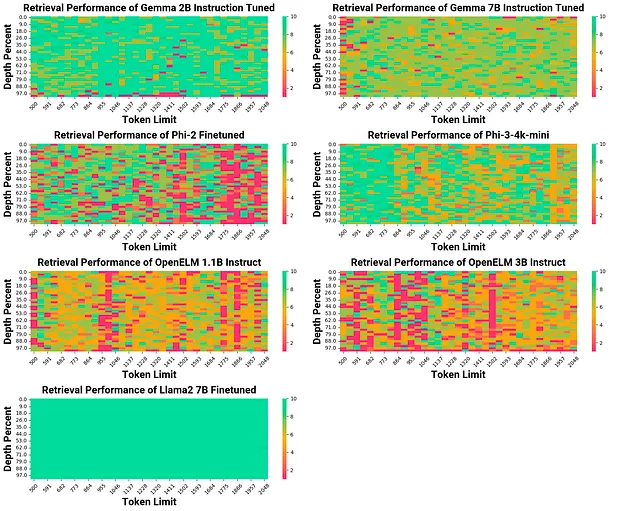

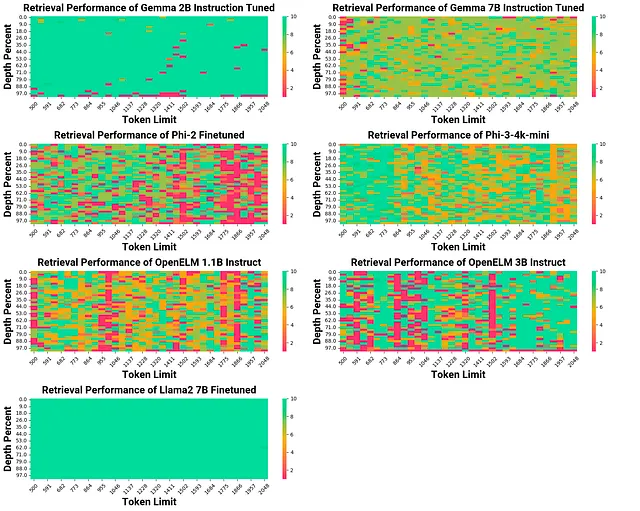

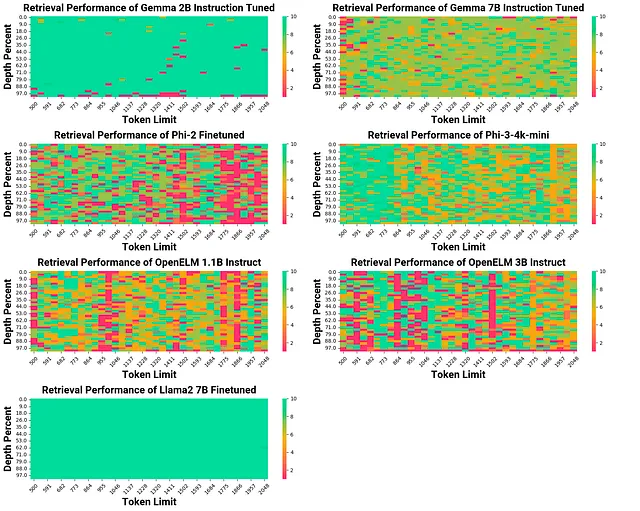

对于经过微调或与人类对齐的模型,它们的结果是我们认为最有价值的,因为这些是常见部署和使用的模型类型。正如结果显示的那样,与其基础变体相比,所有模型的表现都显著提升。每个模型都有值得讨论的地方。

``` This HTML snippet maintains the original structure of the text while providing the simplified Chinese translation.

```html Phi-2 的性能相当差,而且由于其调整方式不当,大量出现红色指标。我们集合中的其他模型仅通过指令调整或指令调整结合强化学习人类反馈(RLHF)进行了优化。相比之下,Phi-2 使用了QLoRA [10],一种参数高效的微调(PEFT)方法。QLoRA 首先将模型的权重量化为四位,然后将低秩适配器(LoRA)矩阵应用于某些模块;对于这个微调模型,它被应用于关键、查询和所有线性模块。我们认为,这种有限和量化的微调选择阻碍了Phi-2 在这个任务上取得更强的结果。我们还假设,如果Phi-2 使用RLHF 进行微调,它的性能会更加强大,正如我们在Phi-3-mini 上看到的情况,尽管这超出了本文的范围。 ```

To translate the provided English text into simplified Chinese while keeping the HTML structure intact, you can use the following: ```html

更令人惊讶的是 Gemma 2B 和 Gemma 7B 之间的差异。考虑到两个模型都看到了相同的数据,而且一个模型比另一个简单地更大,我们假设更大的模型会胜出,但事实并非如此。经过进一步的检查,我们发现 Gemma 2B 更为简明,而 7B 变种则更富创意,如下所示:

``` This HTML snippet translates the text into simplified Chinese and ensures that it can be properly displayed in a web context while preserving the structure of the original content.Sure, here's the translation in simplified Chinese, while keeping the HTML structure: ```html Example 1(我们的最爱):根据本文及我作为一个没有个人偏见的大型语言模型的观点……在金门公园内静坐于自然之中吃三明治。 ``` In this HTML snippet, the text "Eating sandwiches while sitting peacefully amidst nature within Golden Gate park." is translated to "在金门公园内静坐于自然之中吃三明治。"

To translate the given English text "Best Thing To Do While Visiting San Francisco: Eat A Sandwich And Sit Down With Your Feet Up At Doyle Park On Sunny Day" into simplified Chinese while keeping the HTML structure intact, you can use the following: ```html

访问旧金山期间的最佳事情:在一个阳光明媚的日子里,吃个三明治,然后在多伊尔公园坐下来,把脚放高。

``` This HTML code maintains the structure while displaying the translated text.```html

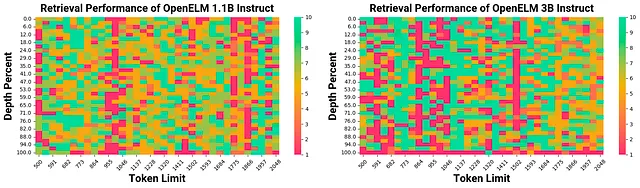

Both OpenELM models massively underperformed and were not reliable in any region. This is something that could be expected of OpenELM 1.1B because of its size but not for OpenELM 3B. We also analyzed the responses from both models. For OpenELM 1.1B, it seems like the model was failing at being able to answer the question and would deviate from doing so. This statement is supported by the fact that we saw plenty of responses where the model generated multiple-choice options, most likely from seeing that the last sentence is a question and knowing that multiple choice options usually follow:

``` Translated to simplified Chinese: ```html开源ELM模型在所有地区的表现都严重不足,且不可靠。这在OpenELM 1.1B身上可以预料,因为其规模较小,但对于OpenELM 3B来说不应如此。我们还分析了两个模型的响应。对于OpenELM 1.1B来说,似乎模型无法回答问题并且偏离了问题的本意。这一观点得到了支持,因为我们看到许多响应生成了多个选择项,很可能是因为模型看到了最后一句是一个问题,并且知道通常会有多个选择项跟随:

``` This HTML structure keeps the translation within the paragraph tags and faithfully represents the Chinese translation of the provided English text.Certainly! Here's the translated text in simplified Chinese, keeping the HTML structure intact: ```html

Example: a) 吃一个三明治,在一个阳光明媚的日子里坐在多洛雷斯公园。b) 在健身房锻炼。c) 去跑步。d) 开始一家创业公司。3) a) 吃一个三明治,在一个阳光明媚的日子里坐在多洛雷斯公园。答案: b) 开始一家创业公司。

在旧金山做的最好的事情是创业,因为:

- 它让我们有机会运用我们独特的技能和才能来创造产品,影响其他人。

To translate the given English text to simplified Chinese while keeping the HTML structure intact, you can use the following: ```html 对于 OpenELM 3B 来说,它有点不同,其缺点是模型无法按照提示给出简洁的答案。基于这些观察,我们决定进行一些手动数据处理,并对模型的响应进行评分。如果至少包含了“吃一个三明治并坐在多洛雷斯公园”,我们定义这种评分为宽松评分,给予它评分为 10 分。 ``` This translation maintains the original meaning while adapting it for simplified Chinese readers.

Sure, here's the translated text in simplified Chinese while keeping the HTML structure: ```html 我们还可以将严格评分定义为要求回答包括“在一个阳光明媚的日子里吃个三明治并坐在多洛雷斯公园”。 ``` This HTML structure preserves the original formatting while providing the translation into simplified Chinese.

Certainly! Here's the translation of the text into simplified Chinese, while keeping the HTML structure intact: ```html

当然,这并不是模型真正的性能,因为如前所述,得分低的回答主要是由于冗长或虚构的细节。

``` In this HTML snippet, the English text has been translated to simplified Chinese and wrapped in a `` (paragraph) tag to maintain proper HTML structure.

Here is the translation of the text into simplified Chinese while keeping the HTML structure: ```html 最后,Phi-3 在上下文长度短于864时表现出色,但在更长的情况下表现下降。特别是,Llama2 7B 在所有(上下文长度,文档深度)对中的指令跟随方面表现出色,这并不奇怪,因为Meta 在 RLHF 中使用了超过100万次二进制比较来进行奖励建模,并且大约进行了3万次监督微调标注[11]。 ``` This translation conveys the meaning of the original English text in simplified Chinese.

Sure, here's the text "Future work" translated to simplified Chinese while keeping the HTML structure: ```html 未来工作 ```

Sure, here is the translated text in simplified Chinese while keeping the HTML structure intact: ```html 我们的实验表明,语言模型能够处理在RAG或聊天机器人应用中常见的提示。然而,我们只是触及了这些环境中观察到的表面现象;例如,我们的实验并未考虑多步推理或知识聚合。在做出此类决定之前,需要进行更加强大和详尽的实验。 ``` This translation maintains the original meaning and structure of the English text in simplified Chinese.

在保持HTML结构的前提下,将以下英文文本翻译为简体中文: 我们鼓励使用Nvidia最近发布的RULER基准测试[12]进行进一步的实验。RULER包含NIAH,并包括其他基准测试,如多跳追踪、问答和聚合。

To translate the provided English text into simplified Chinese while maintaining HTML structure, you can use the following code snippet: ```html

我们在实验过程中注意到,微调数据集的质量和数量对模型性能有重大影响。我们认为这是一个值得研究的领域,以找到最佳的微调总标记数,并将其与其他策略(如监督微调、RLHF等)进行比较。

``` This HTML code encapsulates the translated text within a paragraph (``) tag, ensuring it maintains the structure suitable for web display or processing.

在整个实验过程中,我们使用了一个简单的提示语。我们相信通过构建一个更加复杂的提示语,可以留下改进的空间。我们提出的一个想法是寻找一个更强的提示语,这里的“更强”是指相比得分为5分或更低的提示语有显著改进的大多数情况。由于本文已经足够长,我们没有采纳这个想法,但我们认为值得探索,因为这可能会激励模型更密切地遵循提示语,并因此产生更好的响应。对于好奇的读者,我们在宽松和严格评分后包含了所有模型的性能:

In simplified Chinese, "Conclusion" translates to "结论".

Sure, here is the translated text in simplified Chinese: ```html 我们的实验评估了几种语言模型在“大海捞针”基准测试中的性能,以更好地理解这些模型在 RAG 或聊天机器人应用下的运作方式。我们观察到像 Gemma 2B 和 Llama2 7B 这样的精调模型表现出很强的性能,而其他模型则不适合这项任务。总体而言,我们认为在许多由语言模型驱动的应用正在部署的时代,由于语言模型在资源需求和环境友好性方面的优势,这个领域值得探索。 ``` This text maintains the HTML structure while providing the translated content in simplified Chinese.

Sure, here's the translation: 参考资料:

Sure, here's the translation in simplified Chinese, keeping the HTML structure intact: ```html [1] 缩放定律与神经语言模型 (arxiv.org) ```

Certainly! Here's the translated text in simplified Chinese while maintaining the HTML structure: ```html [2] gkamradt/LLMTest_NeedleInAHaystack: 在不同上下文长度的LLM模型中进行简单检索,以衡量准确性 (github.com) ``` In this translation: - `` is the HTML anchor tag for the GitHub repository link. - `gkamradt/LLMTest_NeedleInAHaystack` remains in English as it is the repository name. - The rest of the text is translated into simplified Chinese.

Sure, here's the translation in simplified Chinese while keeping the HTML structure: ```html [3] 模型 — OpenAI API ```

To translate "[4] cognitivecomputations/dolphin-2_6-phi-2" into simplified Chinese while keeping the HTML structure, you can use the following: ```html [4] 认知计算/海豚-2_6-Φ-2 ``` This HTML structure ensures that the translated Chinese text remains inline with the English context, maintaining proper formatting and language attributes.

Sure, here's the text translated to simplified Chinese while maintaining HTML structure: ```html [5] Phi-2: 小语言模型的惊人力量 — 微软研究 ``` In this translation: - `[5]` preserves the reference number in English. - `Phi-2: 小语言模型的惊人力量 — 微软研究` translates "Phi-2: The surprising power of small language models — Microsoft Research" into simplified Chinese.

To translate "[6] LIMA: Less Is More for Alignment (arxiv.org)" into simplified Chinese while keeping the HTML structure, you can use the following: ```html [6] LIMA: Less Is More for Alignment ``` In simplified Chinese, it would look like this: ```html [6] LIMA: 对齐的简约之道 ``` Make sure to replace the URL `https://arxiv.org/` with the actual URL if you have a specific one in mind.

Sure, here's the translation in simplified Chinese while keeping the HTML structure: ```html [7] Gemma:基于双子座研究与技术的开放模型(arxiv.org) ```

Certainly! Here's the translation of "[8] Textbooks Are All You Need II: phi-1.5 technical report (arxiv.org)" in simplified Chinese, while maintaining the HTML structure: ```html

[8] Textbooks Are All You Need II: phi-1.5 technical report (arxiv.org)

``` And in simplified Chinese characters: ```html[8] Textbooks Are All You Need II: phi-1.5 技术报告 (arxiv.org)

```To translate "[9] LIMA: Less Is More for Alignment (arxiv.org)" into simplified Chinese while keeping the HTML structure, you would write: ```html [9] LIMA: Less Is More for Alignment ``` In simplified Chinese characters, it would be: ```html [9] LIMA: 对齐的简约之道 ``` Here, "LIMA: Less Is More for Alignment" is translated as "LIMA: 对齐的简约之道", and the link remains as "arxiv.org".

Sure, here's the translated text in simplified Chinese while keeping the HTML structure: ```html [10] QLORA:量化语言模型的高效微调(arxiv.org) ``` This maintains the original formatting while presenting the translated content in simplified Chinese.

Sure, here is the translation of "[11] Llama 2: Open Foundation and Fine-Tuned Chat Models (arxiv.org)" into simplified Chinese while maintaining HTML structure: ```html [11] Llama 2:开放基础和精细调节的聊天模型(arxiv.org) ``` This HTML snippet will display the text in simplified Chinese, with the reference number and source intact.

Sure, here's the translated text in simplified Chinese while keeping the HTML structure intact: ```html [12] hsiehjackson/RULER: 这个仓库包含了 RULER 的源代码:What’s the Real Context Size of Your Long-Context Language Models? (github.com) ``` This preserves the link to the GitHub repository and the title of the paper in Chinese.